Abstract

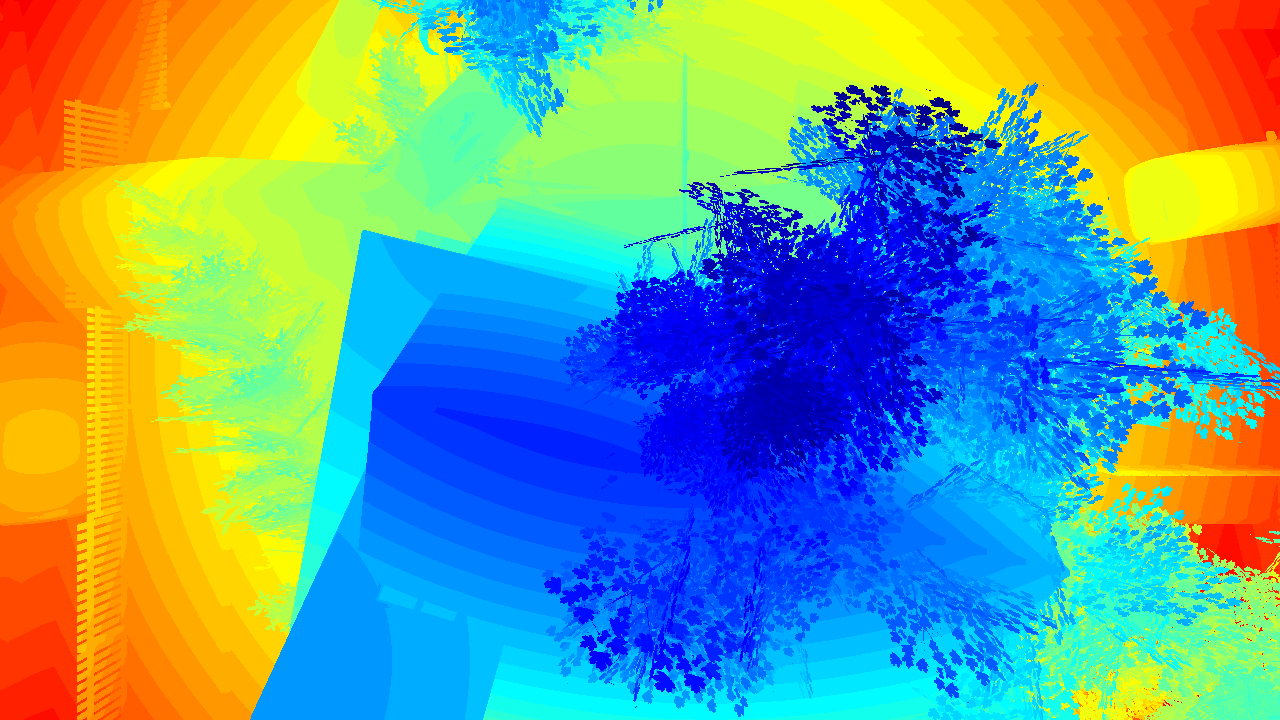

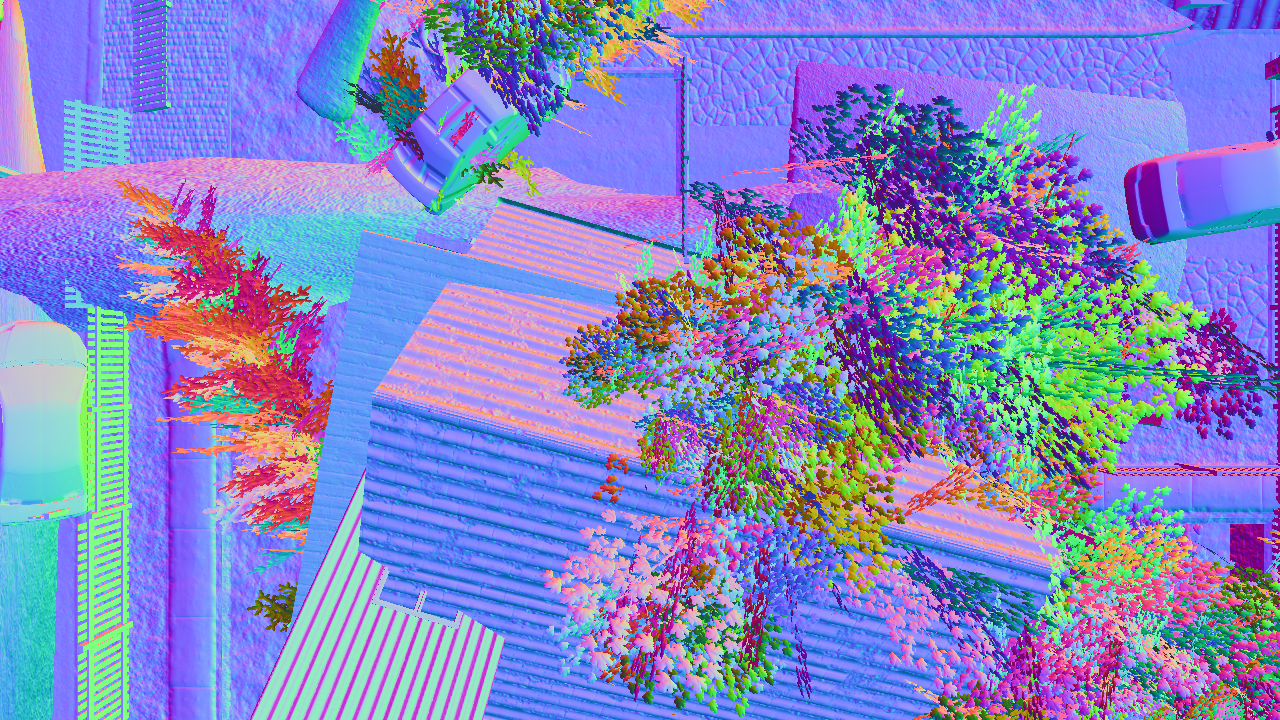

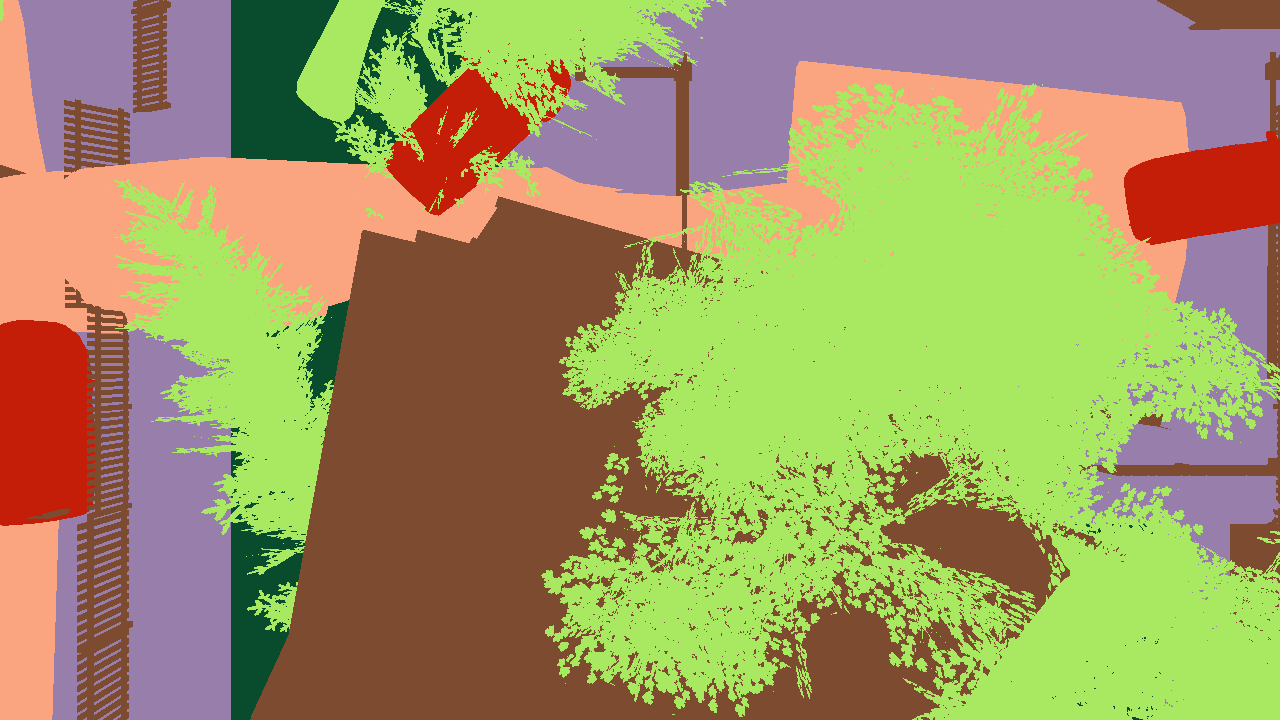

Unmanned Aerial Vehicles (UAVs) equipped with bioradars are a life-saving technology that can help identify survivors under collapsed buildings in the aftermath of disasters such as earthquakes or gas explosions. However, these UAVs must be able to navigate autonomously in disaster-struck environments and land on debris piles in order to locate survivors accurately. This problem is extremely challenging for two reasons: pre-existing maps cannot be used for navigation because the environment may have changed structurally, and existing landing site detection algorithms are not suited to identifying safe landing regions on debris piles. In this work, we present a computationally efficient system for autonomous UAV navigation and landing that does not require any prior knowledge of the environment. We propose a novel landing site detection algorithm that computes costmaps based on several hazard factors, including terrain flatness, steepness, depth accuracy, and energy consumption. We also introduce a first-of-its-kind synthetic dataset of over 1.2 million images of collapsed buildings with ground-truth depth, surface normals, semantics, and camera pose information. We demonstrate the efficacy of our system through experiments in a city-scale hyperrealistic simulation environment and in real-world scenarios with collapsed buildings.